The SPOC (Software Payload On Chip) project teams study ways to integrate Machine Learning on embedded systems for the space, droning and industrial domains.

Since 2019, the SPOC project has been built around a part-time collaboration between Airbus Defence & Space, Viveris Technologies and IRT Saint Exupéry to make these artificial intelligence techniques and methods industrializable on CPU (Central Processing Unit), GPGPU (General-purpose Processing on Graphic Processing Units) or FPGA (Field Program Based Arrays) based SoC (System On a Chip) platforms.

THE SPOC IDEA

The aim of the project is to implement a medium/large neural network on an on-board image acquisition platform to reduce the raw data transfer rate to the ground.

To date, solutions already exist. However, they are not practically usable as they are too greedy in terms of energy consumption, computing power, volume and even implementation time.

The study carried out by the SPOC project Team has made it possible to find the best compromise between these constraints. How ? By carrying out various measurements (performance, power, quality, relevance, etc.) on two target levels: GPGPUs (with a JETSON board) and FPGAs (with an XILINX Ultrascale board).

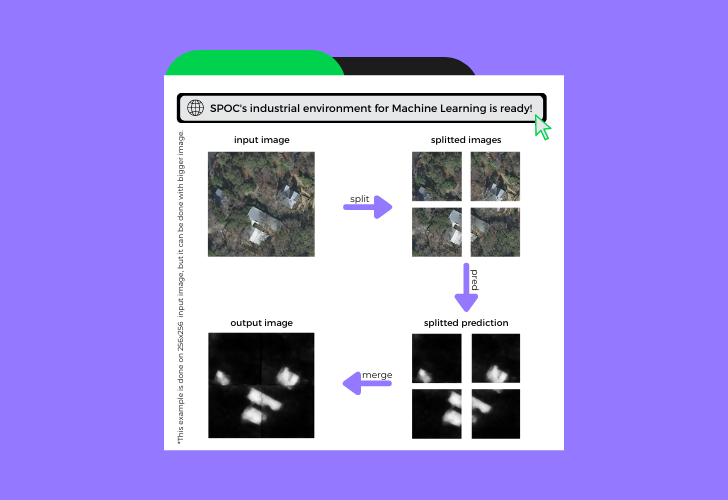

THE DEVELOPMENT ENVIRONMENT

To enable rapid start-up and deployment on future industrial projects, the SPOC team has set up an automated project build flow through Docker and GitLab-CI tools.

Thanks to this environment, developers focus solely on the design phase, software development and target defect correction. By consequence, they are relieved of the tasks of generating executables (binaries and bitsreams) which are automatically produced by the system.

DEVELOPMENT

This study focuses on the evaluation of both GPGPU and FPGA technologies to enable maximum parallelization on the neural network. The developments associated with the GPGPU, were first carried out on a standard PC with an NVIDIA GX3070 graphic card. Here, the SPOC Team deployed the solution with the Tensorflow/Keras Framework coded in Python and carried out the entire model selection and training phase with this same solution. Then, the Team successfully ported this solution to the JETSON AGX XAVIER board.

The next step will be to carry out the entire development in C/C++ language with the cuDNN Framework version 8.0.x or higher. The aim will be to validate an optimisation and to obtain a non-negligible performance gain on the execution of the chosen models.

In parallel, the developments associated with the FPGA were carried out on a ZYNQ UltraScale+ ZCU102 target and the Vivado 2020.1 tool. The FPGAs allows to test different inference methods. To date, various solutions have been studied: the Xilinx DPU (DeepLearning Processor Unit), the VTA (Versatile Tensor Accelerator) and custom accelerators with HLS4ML.

With the emergence of hardware accelerators in data centers and on the edge devices, hardware specialization has played a major role in DeepLearning systems.

The DPU (DeepLearning Processor Unit) technology is a programmable accelerator dedicated to convolutional neural networks. VTA (Versatile Tensor Accelerator) has the advantage, unlike DPU, of being open source.

The next major step will be to measure and validate the different metrics on all the selected targets and to optimise/qualify the models used to complete the project by the end of the first semester of 2022.

Hardware Characteristics of the Solutions

Fixed computer with RTX-3070:

For rapid prototyping in Python with classical frameworks without worrying about space, price and power constraints.

o CPU: i7-11700 8-core 2.9GHz 64-bit CPU

o GPU : 5888-core Ampere GPU with RT & Tensor Cores

o MEM : 4x16GB DDR4 3200 PC25600

o Storage : Kingston 1TB SSD M.2 NVMe

JETSON AGX XAVIER:

For modern industrial projects and to validate a GPU architecture that consumes less power and has a small footprint.

o CPU : 8-core ARM v8.2 64-bit CPU, 8MB L2 + 4MB L3

o GPU: 512-core Volta GPU with Tensor Cores

o MEM : 16GB 256-Bit LPDDR4x | 137GB/s

o Storage : 32GB eMMC 5.1

ZYNQ UltraScale+:

For industrial projects with specific constraints and to validate a less power hungry DPU architecture and a more adapted modularity.

o CPU: quad-core Arm® Cortex®-A53 64-bit CPU

o Logic Cells: 600k

o MEM : PS-4GB DDR4 / PL-512MB DDR4

o Storage : Dual 64MB Quad SPI flash

At the moment of the project, all environments are operational, the learning on the selected models is done and the development of the accelerators for the FPGA as well as the unit operations of the GPGPU are under development and optimization.

The whole SPOC project team thanks its industrial partners: Viveris Technologies, Airbus Defence and Space and IRT Saint Exupéry.