The summer of 2022 saw an unprecedented combination of climatic events in Western Europe. In France in particular, most of the country sweltered through three heat waves, and many all-time heat records were broken, with the thermometer reaching 39.3°C in July in Brest, at the tip of Brittany.

Combined with the lack of rain, the heat worsened the drought and made the vegetation particularly flammable. The country experienced unusual fires, such as the one in Landiras, in the south of the Gironde, which burned more than 20,000 hectares in two separate episodes.

In an attempt to respond to climate change and its consequences, the Technological Research Institute IRT Saint Exupéry is studying technological solutions based on artificial intelligence and neural networks that will allow the detection of forest fires from satellites to provide early warnings.

Given the importance of the subject, we have chosen to make our results public. They were presented in an article at the CNIA 2022 conference (National Conference on Artificial Intelligence). Exceptionally, we have also decided to publish our datasets, which we are happy to make available to the scientific community below. This work is part of the CIAR project (Chaîne Image Autonome et Réactive) carried out by IRT Saint Exupéry. We would like to thank our industrial and academic partners for their contributions: Thales Alenia Space, Activeeon, Avisto, ELSYS Design, MyDataModels, Geo4i, INRIA, and the LEAT/CNRS.

ABOUT THE CNIA 2022 ARTICLE

“Model and dataset for multi-spectral detection of forest fires on-board satellites”

Abstract

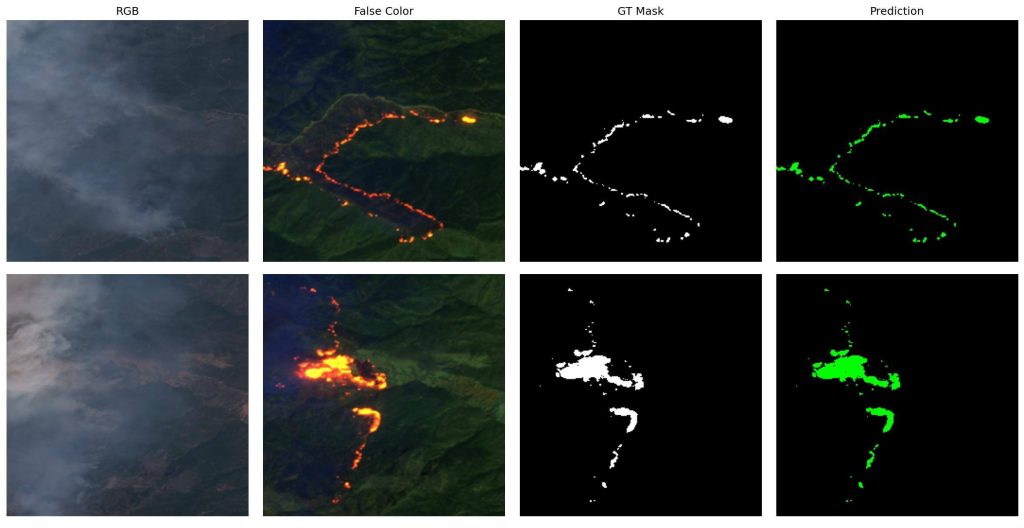

The number of wildfires will likely increase by 50% before the end of the century. In this article, we focus on detecting wildfires onboard satellites in order to raise early warnings directly from space. In this context, we trained a UNetMobileNetV3 neural network to segment multispectral Sentinel-2 images, which we annotated semi-automatically and verified manually. We then deployed this network on a GPU that can be embedded in a low-orbit satellite.

Authors: Houssem Farhat, Lionel Daniel, Michael Benguigui and Adrien Girard.

More about the CNIA 2022 conference and access to the proceedings: here

ABOUT THE S2WDS (SENTINEL-2 WILDFIRE DATASET)

“In this research, we used a set of 90 scenes from the Sentinel-2 satellites, where the active fires were automatically pre-annotated and then manually corrected.”

Our dataset comes in two versions:

Version 1 (various 40m to 80m GSD):

This is the version described in our CNIA 2022 paper, and the one that should be used to reproduce our results. In this first version, the Sentinel-2 images were downloaded from Sentinel-Hub’s OGC API WCS (Web Coverage Service), and their Ground Sampling Distance (GSD) varies from 40m to 80m. The images were then partitioned into 256×256 pixel patches and distributed among three different sets in order to train, validate and test an Artificial Intelligence model that reaches an IoU (Intersection over Union) of 94%.
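The partitioning and scoring steps described above can be sketched as follows. This is only a minimal illustration under stated assumptions, not the code used for the paper: the function names, the 70/15/15 split ratios, the random patch-level split and the handling of incomplete border tiles are all hypothetical choices. Only the 256×256 patch size and the use of IoU come from the text.

```python
import numpy as np

PATCH = 256  # patch side in pixels, as stated in the text


def tile_patches(image: np.ndarray, size: int = PATCH) -> np.ndarray:
    """Cut an (H, W, C) array into non-overlapping (size, size, C) patches.

    Incomplete tiles at the right and bottom borders are dropped
    (an assumption of this sketch).
    """
    h, w, c = image.shape
    rows, cols = h // size, w // size
    cropped = image[: rows * size, : cols * size]
    patches = cropped.reshape(rows, size, cols, size, c).swapaxes(1, 2)
    return patches.reshape(rows * cols, size, size, c)


def split_indices(n: int, fractions=(0.7, 0.15, 0.15), seed: int = 0):
    """Shuffle patch indices and split them into train/validation/test sets.

    The ratios are hypothetical; the text does not state them.
    """
    order = np.random.default_rng(seed).permutation(n)
    n_train = int(fractions[0] * n)
    n_val = int(fractions[1] * n)
    return (
        order[:n_train],
        order[n_train : n_train + n_val],
        order[n_train + n_val :],
    )


def iou(pred: np.ndarray, target: np.ndarray) -> float:
    """Intersection over Union of two binary masks of equal shape."""
    pred, target = pred.astype(bool), target.astype(bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # both masks empty: counted as a perfect match (a convention)
    return float(np.logical_and(pred, target).sum() / union)
```

Note that a random split at patch level can place patches from the same scene in different sets. Splitting by scene is a common alternative when that kind of leakage is a concern.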

Version 2 (consistent 20m GSD):

This version of the dataset was produced shortly after the publication of our CNIA 2022 paper. This time, the Sentinel-2 images were downloaded directly from the Copernicus service, at full resolution and at the L1C processing level. Except for benchmarking against our CNIA 2022 results, we recommend using this version for future research, as all patches have a consistent 20m GSD across the whole dataset.
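To illustrate why a consistent GSD matters, the short sketch below computes the ground area covered by one square patch at a given GSD. It assumes 256-pixel patches in both versions (the text states this size only for Version 1), and `patch_footprint_km` is a hypothetical helper name.

```python
def patch_footprint_km(gsd_m: float, patch_px: int = 256) -> float:
    """Side length, in kilometres, of the ground area covered by a square patch."""
    return gsd_m * patch_px / 1000.0


for gsd in (20, 40, 80):
    side = patch_footprint_km(gsd)
    print(f"{gsd} m GSD: one patch covers {side:.2f} km x {side:.2f} km")
```

Under this assumption, a patch covers about 5.12 km per side at 20m GSD, against 10.24 km to 20.48 km per side over the 40m to 80m range of Version 1, so the same fire spans a very different number of pixels from one Version 1 patch to another.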

You can download both datasets: here

Our CNIA 2022 results (based on S2WDS Version 1) are reproducible via the code available: here

A similar code for S2WDS Version 2 (not part of our CNIA 2022 paper) is available: here

ABOUT THE CIAR PROJECT

“The CIAR project (Chaîne Image Autonome et Réactive, Responsive and Autonomous Imaging Chain) studies the technologies that enable the deployment of Artificial Intelligence for image processing onboard embedded systems (satellites, delivery drones, etc.).”

To this end, the CIAR project addresses three main technical challenges:

- Identifying use cases, and building large databases with relevant labeled images.

- Designing Artificial Intelligence models that are both high-performing and well suited to the constraints of embedded systems.

- Optimizing the selected algorithms, embedding them in hardware targets, and assessing their results.

For several years, the CIAR team has been collaborating with ESA (the European Space Agency) on the OPS-SAT mission. On March 22, 2021, the team achieved two space firsts:

- Remotely updating, from a ground station, an artificial neural network embedded in a satellite.

- Running this neural network on an FPGA (Field-Programmable Gate Array) in orbit.

More about these space firsts: here

KEY INFORMATION

Key numbers

Duration of the project: 5 years (June 2018 – June 2023)

Budget: €6.5M (partially financed by the PIA)

Members: 8

Industrial members

Thales Alenia Space, Activeeon, Avisto, ELSYS Design, MyDataModels, Geo4i

Academic members

INRIA, LEAT/CNRS

Governmental actors

ABOUT THE ENVIRONMENTAL IMPACT OF THIS STUDY

The environmental impact of this study is difficult to measure, but its order of magnitude is worth knowing. Counting only the carbon impact of the electricity consumed to train our Artificial Intelligence, the authors estimate that they emitted about 2 kg of CO2. This is about 3,000 times lower than their carbon footprint, which must itself be divided by 5 by 2050 to stay within the +2°C target of the Paris Agreement. The entire team of authors is aware of the current environmental situation, and this project aims to contribute to the environmental balance of our planet: by quickly detecting wildfires from space, a satellite could directly alert people nearby and thus save lives, fauna, and flora.